It is now over ten years since John Ioannidis published his essay ‘Why Most Published Research Findings Are False’. The paper presented statistical arguments to remind the research community that a finding is less likely to be true if: 1) the study size is small, 2) the effect size is small, 3) the protocol is not pre-determined or not adhered to, 4) it is derived from a single research team. The remedies it proposed included establishing the correct study size, having multiple teams reproduce the results and improving the reporting standards of scientific journals.
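To make the statistical argument concrete, here is a minimal sketch of the positive predictive value (PPV) relationship from that essay, in its simplest form without the bias term: PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a tested relationship is true, 1 − β is the power and α is the significance threshold. The function name and the example figures are illustrative assumptions, not values taken from the essay.

```python
def positive_predictive_value(prior_odds, power, alpha=0.05):
    """Probability that a 'significant' finding is true.

    prior_odds: R, the ratio of true to false relationships among those tested
    power:      1 - beta, the chance of detecting a true relationship
    alpha:      the chance of declaring a false relationship significant
    """
    true_positives = power * prior_odds   # (1 - beta) * R
    false_positives = alpha               # per unit of false relationships
    return true_positives / (true_positives + false_positives)


# Hypothetical field where 1 in 5 tested hypotheses is true (odds R = 0.25).
well_powered = positive_predictive_value(prior_odds=0.25, power=0.8)
under_powered = positive_predictive_value(prior_odds=0.25, power=0.2)
print(f"PPV at 80% power: {well_powered:.2f}")
print(f"PPV at 20% power: {under_powered:.2f}")
```

Under these assumed inputs, dropping the power from 80% to 20% lowers the chance that a significant finding is true from 0.80 to 0.50, which is why small studies feature so prominently in the argument.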

So, have any of these suggestions been taken forward? Open science, a movement which supports increased rigour, accountability and reproducibility of research, is being promoted more widely. In addition, some scientific journals have tightened their requirements for reporting standards. Whether these recommendations are actually followed remains to be established. Evidence from a systematic review we conducted on the asymmetric inheritance of cellular organelles highlights the problems in basic science reporting and study design.

Of 31 studies (published between 1991 and 2015), not one performed calculations to determine sample size, 16% did not report technical repeats and 81% did not report intra-assay repeats. We need to educate our future scientists on study design and impose stricter publication criteria.
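For readers unfamiliar with sample size calculations, the sketch below shows one common textbook form: the normal-approximation formula for comparing the means of two equal-sized groups, n = 2 × ((z₁₋α/₂ + z₁₋β) / d)² per group, where d is the standardised effect size. It is an illustration under those assumptions, not the method used in any of the reviewed studies; the function name and default values are our own, and a real design should be checked with a statistician.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.8):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    effect_size: standardised difference between groups (Cohen's d)
    alpha:       two-sided significance threshold
    power:       desired probability of detecting the effect (1 - beta)
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    n = 2 * ((z_alpha + z_beta) / effect_size) ** 2
    return math.ceil(n)                            # round up to whole samples


# A medium effect needs far more samples than a large one.
print(sample_size_per_group(0.5))
print(sample_size_per_group(1.0))
```

With the default 5% significance level and 80% power, this approximation asks for 63 samples per group to detect d = 0.5 and 16 per group to detect d = 1.0, far more than the handful of repeats typical of many bench experiments.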

Impartial and robust evaluations of biomedical science are required to determine which new biomedical discoveries will be clinically predictive. We should be concerned by the lack of reproducibility in biomedical science because it is a major contributing factor to the low rate of clinical trial success (only 9.6% of phase 1 studies reach approval stage). Our ability to judge which discoveries will have real-life effects is crucial.

What can be learned from clinical research?

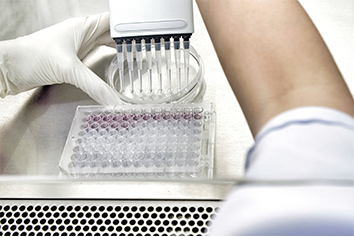

A lot can be learned from clinical research. Published clinical research must follow strict reporting guidelines to ensure that the research is transparent and that decision making is based on unbiased evidence gathered by systematic review. Systematic reviews provide a methodology to identify all research evidence relating to a given research question.

Importantly, systematic reviews are unbiased (not opinion-based) and are carried out according to strict guidelines to ensure the clarity of the results and their reproducibility.

They form the basis of all decision making in clinical research. Importantly, the evidence gathered by the review is assessed for quality. This allows the review to present results graded according to the ‘best evidence’. The quality of clinical evidence is judged primarily according to the study design (randomisation, blinding of participants and research personnel, etc.). There are many risk of bias tools available, each appropriate to a different study design. The GRADE approach seeks to combine the findings of a systematic review with study size, imprecision, consistency of evidence and indirectness, providing a clear summary of the strength of evidence to guide recommendations for decision making.

It makes sense for decision making in biomedical research to be judged in a similarly open and unbiased manner. Astoundingly, the choice of which biomedical discovery is suitable for further investment is usually an opinion-based decision. Big steps have been made to introduce systematic review to preclinical research. There are reporting guidelines for animal studies and risk of bias tools. The quality of these studies is again judged on study design.

At a basic bioscientific level one must argue that judging the quality of evidence should focus on more fundamental aspects of the research: 1) how valid is the chosen model (how well does it recapitulate the human disease of interest)? 2) how valid is the chosen marker (how well does it identify the target)? 3) how reliable is the result? Pioneering work by Collins and Lang has introduced tools to perform such judgments. These tools aim to directly address the issues raised by John Ioannidis and to highlight the strengths and gaps in a given research base.

About the author

Dr Shona Lang has spent 8 years working in clinical epidemiology preparing systematic reviews, meta-analyses, rapid reviews, and health technology assessments to support policy making, licensing and reimbursement decisions in clinical research.

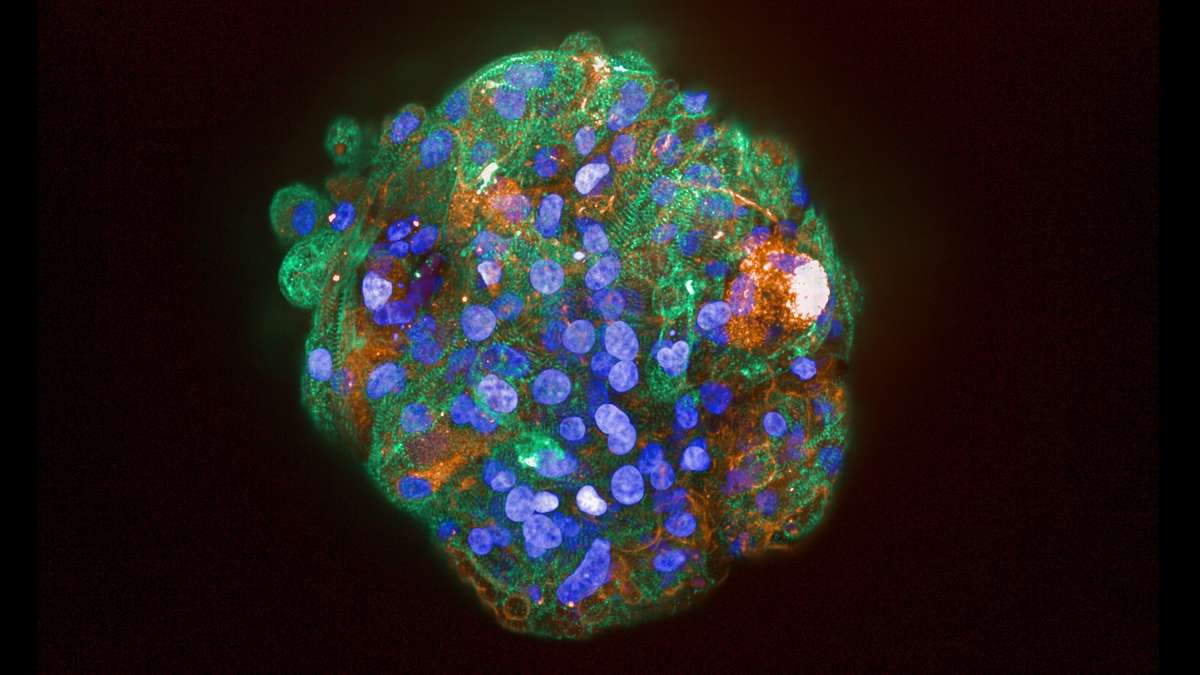

Prior to this, she was a Wellcome Fellow in cancer research and tissue development. Her focus was on alternative models to animal testing (human tissue culture, organoids, 3D models and stem cells). Using her expertise in both fields she is researching the value of evidence-based decision making in biomedical science and clinical translation and hopes to provide solutions to de-risk investment in this field.

To this end she has established QED Biomedical Ltd, which you can find on Twitter and LinkedIn, with her long-term collaborator Dr Anne Collins.

This article is from a series contributed by the UK drug discovery community. For more information read our disclaimer.