In recent years, machine learning and Artificial Intelligence (AI) have become widely popularised in all areas of modern life, and drug discovery is no exception.

Why AI?

The benefit of AI for detecting novel drug-target interactions, or even for the in silico design of novel drug-like compounds, is clear. The chemical search space of potentially synthesizable compounds is simply too large (estimates range from 10²⁰ to 10⁶⁰ molecules) for any experimenter to make and screen every possible drug-like molecule against every known druggable target in turn.

To put these magnitudes into context, through a rough calculation, 10⁶⁰ molecules is greater than the number of individual H₂O molecules within all the oceans on Earth (approximately 10⁴⁶).
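The rough calculation above can be reproduced in a few lines. This is only a back-of-the-envelope sketch: the ocean mass used here (about 1.4 × 10²¹ kg) is an assumed approximate figure rather than a value taken from this article, and the function name is purely illustrative.

```python
import math

# Approximate physical constants (assumed round figures for a rough estimate).
OCEAN_MASS_KG = 1.4e21        # assumed total mass of water in Earth's oceans
MOLAR_MASS_WATER_G = 18.015   # grams per mole of H2O
AVOGADRO = 6.022e23           # molecules per mole


def ocean_water_molecules(mass_kg=OCEAN_MASS_KG):
    """Estimate the number of H2O molecules in a given mass of water."""
    moles = mass_kg * 1000.0 / MOLAR_MASS_WATER_G
    return moles * AVOGADRO


n_water = ocean_water_molecules()
# Order of magnitude of the estimate, and how many orders of magnitude
# separate it from the upper bound on chemical space (10^60).
water_exponent = math.floor(math.log10(n_water))
gap_in_orders_of_magnitude = 60 - math.log10(n_water)
```

Under these assumptions the estimate lands in the 10⁴⁶ range, more than ten orders of magnitude short of the upper estimate for chemical space.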

Machine learning provides one means to bypass this combinatorial issue. Essentially, the goal here is to develop a computational activity classification model, which can:

- Correctly interpret the molecular structure of small molecules that are input into the model

- Predict, from prior experience (exposure to training data), the complex relationship between the input small molecule and one or several known drug targets of interest

The output from the above steps can then be used to guide much more focused experimental drug synthesis and testing. Rather than testing all possible compound-target interactions experimentally, the machine learning algorithms can be used as an upstream tool to pinpoint which areas of chemical space are most likely to yield promising experimental results.

An enormous saving of both time and money.
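The two requirements listed above (interpreting a molecular structure, then predicting activity from prior examples) can be illustrated with a deliberately simple toy. The sketch below is not a production method: it assumes molecules are given as SMILES strings, uses character n-grams as a crude stand-in for a proper structural fingerprint (a real pipeline would normally use a cheminformatics toolkit), and predicts by copying the label of the most similar training molecule. The training labels are made up purely for illustration and are not measured activities.

```python
def fingerprint(smiles, n=2):
    """Crude structural encoding: the set of overlapping character n-grams."""
    return {smiles[i:i + n] for i in range(len(smiles) - n + 1)}


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of features."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def predict_active(query_smiles, training_data):
    """1-nearest-neighbour prediction: return (label, similarity) of the
    training molecule most similar to the query."""
    query_fp = fingerprint(query_smiles)
    best_label, best_sim = None, -1.0
    for smiles, label in training_data:
        sim = tanimoto(query_fp, fingerprint(smiles))
        if sim > best_sim:
            best_label, best_sim = label, sim
    return best_label, best_sim


# Toy training set: (SMILES, active?) pairs with invented labels.
TOY_TRAINING = [
    ("CC(=O)Oc1ccccc1C(=O)O", True),
    ("CCO", False),
    ("c1ccccc1O", True),
    ("CCCC", False),
]

label, similarity = predict_active("CC(=O)Oc1ccccc1", TOY_TRAINING)
```

Returning the similarity alongside the label is a small design choice: it gives a rough sense of how far the query sits from anything the model has seen.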

The success of the above machine learning scheme relies heavily on the presence of sufficient (and good quality) examples of known drug-target relations, from which the algorithms can learn during an initial ‘training’ phase. In order to assess whether the algorithm has successfully learnt activity relationships, a typical strategy is to hide a fraction of these known examples (a ‘test set’) and to determine the success rate at which the algorithm can reproduce these hidden results.
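The hold-out strategy described above can be sketched as follows. This is a minimal illustration under stated assumptions: the data are synthetic (a single numeric descriptor per 'compound', labelled active when it exceeds 0.5), the model is a simple nearest-neighbour lookup, and all function names are hypothetical.

```python
import random


def holdout_split(examples, test_fraction=0.2, seed=0):
    """Shuffle the examples and split them into (train, test).
    The test set is hidden from the model during training."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(round(len(shuffled) * test_fraction)))
    return shuffled[n_test:], shuffled[:n_test]


def nearest_neighbour_predict(x, train):
    """Predict the label of the training example whose descriptor is closest to x."""
    return min(train, key=lambda example: abs(example[0] - x))[1]


def success_rate(train, test):
    """Fraction of hidden test labels that the model reproduces."""
    hits = sum(nearest_neighbour_predict(x, train) == y for x, y in test)
    return hits / len(test)


# Synthetic data, generated only for this illustration.
rng = random.Random(42)
examples = [(x, x > 0.5) for x in (rng.random() for _ in range(200))]

train, test = holdout_split(examples, test_fraction=0.25)
rate = success_rate(train, test)
```

Fixing the shuffle seed keeps the split reproducible, so that changes in the success rate reflect changes to the model or data rather than a different random partition.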

This is comparable to how we ourselves learn – if I showed you several photographs of a newly discovered animal, your ability to correctly identify the same animal in a new photograph is likely to be related to how many photographs I’d shown you and also to how blurry the photographs were (the data quality).

Why let the algorithms learn?

Consider the following contrasting (non-learning) approach. For a hypothetical protein target of interest, suppose we feel confident that enough is known about the true substrate binding mode of the protein that we can devise a fixed rule set to identify suitable drug candidates, based on which chemical moieties are present or absent within each tested small molecule. By construction, the proposed chemical rule set would correctly identify some suitable drug candidates (it was designed that way). However:

- The rule set would be limited strictly to the singular target of interest

- The effectiveness of the proposed rule set would depend directly on how much we know about the function of that protein target, and on how correct that knowledge is
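A fixed rule set of the kind described above might look like the following sketch. The moiety names are hypothetical placeholders invented for this example, and each molecule is assumed to have already been reduced to a set of moiety labels; no real target is implied.

```python
# Hand-crafted rules for ONE hypothetical target (names are illustrative only).
REQUIRED_MOIETIES = {"hinge_binder", "hydrogen_bond_donor"}
FORBIDDEN_MOIETIES = {"reactive_aldehyde"}


def passes_rule_set(moieties):
    """Return True if every required moiety is present and no forbidden one is."""
    present = set(moieties)
    has_all_required = REQUIRED_MOIETIES <= present
    has_forbidden = bool(FORBIDDEN_MOIETIES & present)
    return has_all_required and not has_forbidden


candidate_a = {"hinge_binder", "hydrogen_bond_donor", "phenyl_ring"}
candidate_b = {"hinge_binder", "hydrogen_bond_donor", "reactive_aldehyde"}
candidate_c = {"phenyl_ring"}

results = [passes_rule_set(m) for m in (candidate_a, candidate_b, candidate_c)]
```

Both limitations are visible in the code: the two constant sets are specific to one target, and the filter is only as good as the knowledge used to write them.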

In contrast, modern AI-based approaches offer a far more dynamic paradigm. An individual chemical rule set is not specified, but rather an implicit rule set can be learnt by the model itself during exposure to labelled training data samples of known drug-target interactions.

Since an explicit rule set is not required per drug target, the model can also rapidly adapt to distinct protein targets, simply by exposing the model to labelled training data associated with different targets; in the contrasting ‘non-AI’ rule-based approach discussed above, this would require detailed crafting of a new rule set for each new target.

In addition, when new activity data becomes available, the AI algorithms can be retrained or fine-tuned to account for this additional data source, without having to redefine a rule set from scratch.

Target prediction on large chemical libraries

As alluded to above, machine learning-based activity models provide a mechanism to rapidly and inexpensively screen compounds against a large set of possible target interactions. This capability is therefore of significant benefit to both vendors and purchasers of large chemical libraries.

A purchasable compound library may contain tens of thousands of (often diverse) individual chemicals that are available for experimental screening, often selected and grouped in a manner that is, from prior knowledge, expected to have the highest activity rate against particular targets of interest (e.g. kinases).

However, unless a pre-curated target-specific library exists for a customer’s new protein target of interest, there is no knowledge at hand to indicate which compounds are a worthy investment. Activity models provide a means of rapidly annotating these large chemical libraries against as many targets as the customer requires, thus optimising their success-to-expenditure ratio.

The distributor of the compound library benefits equally: the novel activity predictions provided by such models allow much more extensive tailoring of their libraries, more efficient re-annotation of compounds as new activity information becomes available through the scientific community (using retrained or updated models), and possibly the delivery of new target-specific collections, thus attracting broader customer interest.

There is an unavoidable caveat in the application of any activity model here. As previously stated, the performance (and therefore usefulness) of such activity models hinges crucially on both the quality and quantity of the training examples that the model learns from.

Ultimately, experimental verification is key for all novel drug-target activity predictions.

This is particularly pertinent in cases where activity predictions have been made outside the seen chemical space of the training data set. In the application to the chemical library annotations, such issues can be partially mitigated, however, since customer feedback from downstream experimental screening can provide a direct means to continuously re-validate the predictive performance of any applied machine learning approaches.
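One common way to flag predictions made outside the seen chemical space is a similarity check against the training set. The sketch below is an assumption-laden illustration rather than a description of any particular product: fingerprints are represented as plain sets of integer 'on-bit' indices, the function names are hypothetical, and the 0.4 threshold is an arbitrary placeholder that would need tuning in practice.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of on-bit indices."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def in_seen_chemical_space(query_fp, training_fps, threshold=0.4):
    """Return (is_inside, nearest_similarity).

    A query counts as 'inside' the seen chemical space if its most similar
    training fingerprint reaches the (assumed) similarity threshold."""
    nearest = max((tanimoto(query_fp, fp) for fp in training_fps), default=0.0)
    return nearest >= threshold, nearest


# Toy fingerprints, invented for illustration.
training_fps = [{1, 2, 3, 4}, {2, 3, 5, 8}, {10, 11, 12}]

familiar = in_seen_chemical_space({1, 2, 3, 9}, training_fps)
unfamiliar = in_seen_chemical_space({20, 21, 22}, training_fps)
```

Predictions for compounds flagged as outside the seen space are natural candidates to prioritise for experimental verification.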

About the author

Dr Charles Bury is a Cheminformatics Data Scientist at Medicines Discovery Catapult.

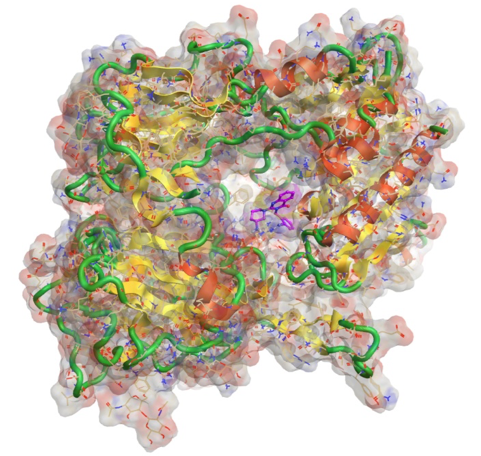

Before joining us, Charles completed a DPhil in Biochemistry at the University of Oxford, where his research focused primarily on the development of automated tools for radiation damage detection & correction during macromolecular X-ray crystallography (MX) experiments.